While taking care of my newborn son, I've had a lot of time on my hands. A chunk of that time has been on puzzles, and a chunk of it has been on thinking about algorithms to solve the puzzles quickly.

The New York Times has a pretty good puzzle called the Spelling Bee. I've been thinking about that one in particular, and I've found it to be a neat little exercise. I've used it as a way to force myself to write some actual, semi-practical Rust code for the first time, too.

Spelling Bee puzzles

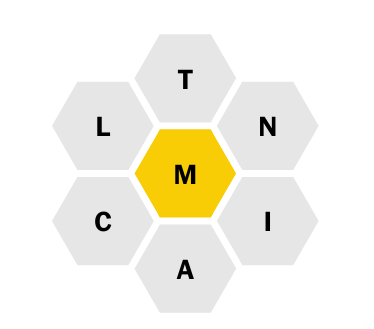

A Spelling Bee puzzle looks like this:

Your goal is to spell words using the letters in the little hexagonal grid. Valid words have a few rules:

- They must be at least 5 letters long (or 4, if you're playing on the app).

- They must only use letters found in the grid.

- They must always use the center golden letter at least once.

It's fine for a word to use letters multiple times. So, for example, some valid words for the puzzle above, if you're on the app:

almanacanimalmintinimical

Finally every spelling bee has at least one "pangram": a word that uses every letter in the grid. In this one, anticlimactic is a pangram.

So, that's the puzzle. How would you write a computer program to solve it?

The naive answer: brute force with a dictionary

The first thing that comes to mind is our baseline. Brutally stupid. Load up a dictionary, like from /usr/share/dict/words or whatever, and rip through it, checking every word. Our algorithm is this:

set result = []

for each word in dictionary

if is_valid(word):

append word to result

return result

Again, doing the first thing we can think of to define is_valid, we can just check each character one-by-one in a candidate word to see if it's valid:

found_center_letter = False

for letter in word:

if letter == center_letter:

found_center_letter = True

continue

if letter not in puzzle:

return False

// If we got here, all letters are in the puzzle, so

// all that's left is the center.

return found_center_letter

Let's translate that into some Rust. I should say now that I have written approximately zero Rust before. This was a learning exercise; I'm certain I have committed stylistic faux pas and performance blunders and other heresies 1. But I think this gets the point across:

struct Puzzle {

center_letter: char,

outer_letters: [char; 6],

}

/// NaiveSolver solves a puzzle by iterating over every word in a dictionary,

/// and seeing whether that word is valid.

struct NaiveSolver {

words: Vec<String>,

}

impl NaiveSolver {

fn new(word_list: Vec<String>) -> NaiveSolver {

NaiveSolver {

words: word_list

.iter()

.filter(|w| w.len() >= MIN_LENGTH) // A const set to 4.

.cloned()

.collect(),

}

}

fn word_is_valid(&self, puzzle: &Puzzle, word: &str) -> bool {

let mut has_center = false;

for c in word.chars() {

if c == puzzle.center_letter {

has_center = true;

} else {

if !puzzle.outer_letters.contains(&c) {

return false;

}

}

}

return has_center;

}

fn solve(&self, puzzle: &Puzzle) -> Vec<String> {

let mut result: Vec<String> = Vec::new();

for word in self.words.iter() {

if self.word_is_valid(puzzle, word) {

result.push(word.to_string())

}

}

result

}

}

Theoretically speaking, this ain't an especially fabulous algorithm at first glance. In the worst case, it needs to check every letter of every word in the dictionary, but at least it's easy to see it's correct.

How does this thing do in practice? I've written some rudimentary benchmarks and get numbers around 80ms per spelling bee puzzle on my 2019 Macbook 2.

That's probably fast enough for any normal purpose. Solving the bee 14 times per second is almost certainly good enough.

But can we do better?

It sure seems like we should be able to. For one thing, this naive solver wastes a ton of time on entire regions of the dictionary that just have no chance of succeeding. Like, if A isn't in the puzzle, you wouldn't open the dictionary to page 1 and check every word that starts with A; you can skip past to B straight away. If B isn't in the puzzle, skip on as well. The point is that you should be able to just check words in a section that start with a letter from the puzzle.

This intuition leads to the first interesting solution.

The computer science answer: build a trie

So, we could index all the words in the dictionary by their first letter:

{

"a": ["aardvark", "aardvarks", "abaci", ...],

"b": ["baa", "baas", "babble", ...],

"c": [...],

...

"z": ["zanier", "zany", ...]

}

Then, the algorithm looks a little cleverer. Instead of searching every word in the dictionary, we just search ones that start with a letter from the puzzle.

But we can apply this strategy again for the second letter. If A is in the puzzle but B isn't, we can skip all the words that start with AB. We get something like:

{

"a": {

"a": ["aardvark", "aardvarks"],

"b": ["abaci", "aback", "abacus", ...],

"c": ["acacia", "academician", ...],

...

},

"b": {

"a": [...],

...

},

...

}

You see where this is going: we can build this all the way down, for an entire chain of letters forming words. For example, let's take a really small dictionary, with the words

daddaftdamdamndog

We could build a trie that looks like this:

+-+

|D|

+++

|

+-+---+---+-+

|A| |O|

+-+ +-+

| |

+-+---+---+-+ +-+

|D| |F| |M| |G|

+-+ +-+ +-+ +-+

| |

| |

+-+ +-+

|T| |N|

+-+ +-+

This is, theoretically, much more efficient because it lets us only investigate a subset of words. We can choose to only explore the trie by only following paths through it that use letters from the puzzle. All valid paths are apparently valid words.

In Rust, here's how I chose to implement this (again, buyer beware, your warranty is void if you copy this, etc etc):

impl TrieSolver {

pub fn new(word_list: Vec<String>) -> TrieSolver {

let mut root = TrieNode::new(false);

for word in word_list.iter().filter(|w| w.len() >= MIN_LENGTH) {

root.add(word.clone());

}

TrieSolver { dictionary: root }

}

fn solve(&self, puzzle: &Puzzle) -> Vec<String> {

self.dictionary.find_words(puzzle, false, "")

}

}

struct TrieNode {

is_word: bool,

children: HashMap<char, TrieNode>,

}

impl TrieNode {

fn new(is_word: bool) -> TrieNode {

TrieNode {

is_word: is_word,

children: HashMap::with_capacity(26),

}

}

fn add(&mut self, mut word: String) {

let first_char = word.remove(0);

// Already have a child there, so add remainder of word.

if let Some(child) = self.children.get_mut(&first_char) {

// No word remainder, so we should register child as a complete word.

if word.len() == 0 {

child.is_word = true;

} else {

child.add(word)

}

} else {

// No child, so make a new one

let mut child = TrieNode::new(word.len() == 0);

// More to go

if word.len() > 0 {

child.add(word);

}

self.children.insert(first_char, child);

}

}

/// Finds all the words that match a puzzle for the given graph. Used

/// recursively, with state captured in the 'path' and

/// 'center_letter_found' variables.

fn find_words(&self, puzzle: &Puzzle, center_letter_found: bool, path: &str) -> Vec<String> {

let mut result = Vec::with_capacity(TYPICAL_RESULT_SIZE);

if center_letter_count > 0 && self.is_word {

result.push(path.to_string());

}

let mut subpath = path.to_string();

if let Some(child) = self.children.get(&puzzle.center_letter) {

subpath.push(puzzle.center_letter);

let child_words = &mut child.find_words(puzzle, true, &subpath);

result.append(child_words);

subpath.pop();

}

for letter in puzzle.outer_letters.iter() {

if let Some(child) = self.children.get(&letter) {

subpath.push(*letter);

let child_words = &mut child.find_words(puzzle, center_letter_found, &subpath);

result.append(child_words);

subpath.pop();

}

}

result

}

}

This is an awful lot more code, but it's still frankly not that much. It's 80 lines, and there really isn't that much trickiness to it3. It certainly isn't very frugal with memory - it allocates a new string 'subpath' for every node in the graph!

Anyway, how does it do? In my benchmarks, it does about 40x better, at about 2ms to solve each puzzle. On the other hand, it takes way longer to start up - we need to construct that big trie, which takes a lot of computation. The naive solver easily outpaces the trie solution if you're just trying to solve one Spelling Bee; the trie only overtakes it after solving dozens of puzzles.

But can we do better?

I had a feeling we could do better. My intuition here was a lot murkier, but it seemed to me that this was an awful lot of pointer lookups and allocations for traversing the trie. Sure, the complexity of the solution is very nice, but the so-called "constant factor" here - that is, the time spent on each elementary operation - is high.

In addition, there seems to be extra time spent looking at all the different letters in a word. Something that bugged me about the old is_valid function was that it checked every single letter. We don't need to check every letter of a word to know if it's valid, we just need to test the set of characters that are used in that word. This reduces many words - abaci becomes abci; abracadabra becomes abcdr. Is there some way to use these ideas?

The bit-fiddler answer: bitmasks

I'm not sure exactly how I thought of this idea, but it came to me around 4:45AM while changing my son's diaper.

The idea is to assign each word a bitmask. We can use just a few bitwise operations to tell whether the word is valid. Then, we can go back to our iterative, rip-through-the-dictionary approach. Even though we waste time on lots of invalid words, we waste very little time on each one; maybe this can beat the trie.

What is this bitmask? It's a 32-bit value, a u32 in Rust. We assign a to the first bit, b to the second, c to the third, and so on. The bitmask of the word has a '1' in a letter's assigned bit if it contains that letter. Some examples may help. Here's apple:

10001000000100010000000000000000

abcdefghijklmnopqrstuvwxyz______

And cosmopolitan4:

10100000100111110011000000000000

abcdefghijklmnopqrstuvwxyz______

And inimical:

10100000100111000000000000000000

abcdefghijklmnopqrstuvwxyz______

Now, if we have all the bitmask of a word, and we have a puzzle, we can check whether the bitmask is valid for the puzzle in just a few bitwise comparisons.

Suppose the puzzle is the one pictured above, A NIMLCT. To check whether a bitmask has the center letter, A, we can just do a bitwise AND with the bitmask of A. A positive value means that yes, the word has the center letter.

10100000100111000000000000000000

AND

10000000000000000000000000000000

=

10000000000000000000000000000000

Now to check that it only uses valid letters, we need to be slightly more clever. It's valid if it does not use any letters outside the puzzle. To check that, we compute the set of letters outside the puzzle by inverting the puzzle letters' bitmask:

^10100000100111000001000000000000

=01011111011000111110111111111111

Then, we OR that with a given word's bitmask. If the result is exactly zero, then great! None of those letters were present in the word. This works for inimical's bitmask, so yes - that's a valid word:

10100000100111000000000000000000

AND

01011111011000111110111111111111

=

00000000000000000000000000000000

The code for this is pretty simple too, in Rust, although it does have a long stretch for the bitmask mapping of ASCII lowercase letters, so I'll just link to it for the full code - here's the important bit:

impl Solver for BitmaskSolver {

fn solve(&self, puzzle: &Puzzle) -> Vec<String> {

let center_letter_mask = BitmaskSolver::bitmask_letter(&puzzle.center_letter);

// forbidden_letter_mask has 1 for every letter which must *not* be

// used. We compute it by ORing together all the allowed words, and then

// inverting.

let mut forbidden_letter_mask: u32 = center_letter_mask;

for letter in puzzle.outer_letters.iter() {

forbidden_letter_mask |= BitmaskSolver::bitmask_letter(letter)

}

forbidden_letter_mask = !forbidden_letter_mask;

let mut result: Vec<String> = Vec::with_capacity(TYPICAL_RESULT_SIZE);

for mask in self.bitmasks.iter() {

if (mask.mask & center_letter_mask != 0) && (mask.mask & forbidden_letter_mask == 0) {

result.push(mask.word.to_string());

}

}

result

}

}

Now, this is kind of in a weird spot, theoretically. It should be faster than the naive brute-forcer, because it doesn't need to check every letter, but it still needs to check every word. But that check should be very fast. How does it do?

I get about 5ms. So that's a little worse than half the speed of the trie solver, but still much faster than the naive solver. The problem really is that we spend a lot of time doing these bitwise operations on tons of words that can't possibly work.

Can we do better?

It turns out we can - we can do a lot better. Check out part 2.

-

In particular, I'm very insecure about my use of

Vec<String>. But I'm sure there are more problems. ↩ -

I get numbers closer to 600μs on my 2019 Lenovo Carbon X1 with Ubuntu 20.04. I think that's down to the much, much smaller

/usr/share/dict/wordsfile. It would be interesting to explore this relationship. ↩ -

You might notice a bit of premature cleverness in

with_capacityusage. I think I may have just gone a little crazy there; Rust's official docs recommend this sort of thing but I really doubt it matters terribly much at the volumes we're dealing with here (~50 words in the result set). ↩ -

It occurs to me that we can skip any word with more than 7

1values in it. I didn't implement this, but it might be a good fast way to trim the dictionary! ↩