Last week, the US's National Science Board capped its funding for giant telescopes at $1.6 billion. This may sound like a staggering amount of money - and it is - but it's not likely enough to build the next generation of telescopes, which are estimated to cost $3 billion or more.

I think that the pursuit of these ever-larger telescopes is a mistake, and one that we'll look back on as a bit quaint. I think the future is instead going to lie in building large systems of many small (0.5m-1m) telescopes, rather than in building 10m+ observatories. This scales better on cost, is more scientifically flexible, is easier to iterate upon and upgrade, and takes advantage of many recent technological and industrial developments.

Age of the mega telescope

The last hundred years of astronomy have been about building bigger and bigger telescopes. In 1900, telescopes larger than 2 meters in diameter were difficult to imagine; today they are commonplace and even considered small. Ten-meter telescopes on Mauna Kea and in the Canaries are now in use; these devices are so enormous that entire mountaintops need to be leveled to make room for them.

This trend is continuing over the next decade with the planned construction of the Giant Magellan Telescope, the Thirty Meter Telescope, and the European Extremely Large Telescope, designed to have an enormous 39.3 meter aperture.

There is good reason for this. Telescopes, the saying goes, are just "photon-collecting buckets." To a first approximation, all you need to know about a telescope is its diameter, which tells you how many photons it collects and funnels down to a sensor. The aperture area - and thus the number of photons collected - grows as the square of the aperture diameter: a telescope that is twice as wide collects four times as many photons.
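
The diameter-squared rule is easy to check numerically. The snippet below is a minimal sketch, assuming an idealized, unobstructed circular aperture (real telescopes lose some area to a secondary mirror and its supports); the function name `collecting_area` is just illustrative.

```python
import math


def collecting_area(diameter_m: float) -> float:
    """Area in square meters of an idealized, unobstructed circular aperture."""
    return math.pi * (diameter_m / 2) ** 2


# Compare a few aperture sizes mentioned in this post.
for d in (0.5, 1.0, 2.0, 10.0):
    print(f"{d:5.1f} m aperture -> {collecting_area(d):7.2f} m^2")

# Doubling the diameter quadruples the area, whatever the starting size.
ratio = collecting_area(2.0) / collecting_area(1.0)
print(f"Area ratio for 2x diameter: {ratio:.1f}")
```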

These gigantic telescopes have been incredible instruments, and we live in a "golden age of astronomy" in part because of their magnificent power [1].

But they've also been incredibly expensive and difficult projects. The Giant Magellan Telescope and Thirty Meter Telescope are estimated to require $3 billion to build - and that's just the estimate.

One reason the costs scale up quickly is that engineering challenges get more and more difficult. As a telescope's primary mirror gets wider, it gets heavier. Supporting and moving this massive object requires serious structural engineering work. The enclosure surrounding the telescope needs to be bigger too, with a volume that scales as the cube of the diameter. The construction project itself gets far more complex: a two-lane road is about 5 meters wide, so just transporting a 10 meter mirror becomes a serious endeavor.

We must go smaller

While telescopes are getting larger, other technologies are getting conspicuously smaller. Computers have shrunk from room-sized mainframes to devices that fit in your pocket. Digital cameras, once eye-wateringly expensive and bulky, are now mass-produced commodities with pixels measured in microns.

In the world of telescopes, manufacturing techniques and tools have advanced considerably, bringing telescopes in the 0.5m to 1.0m range into the consumer world. You can now buy a PlaneWave 0.5 meter telescope "off the shelf" for $56,000, including a full optical train and mount. You can even get a 1 meter telescope for a cool $575,000 as a complete unit with impressive performance.

At the same time, processing of astronomical images has entirely shifted from photographic plates to software. Astronomers have built sophisticated suites capable of "stacking" images - that is, taking multiple exposures of the same region of the sky, possibly over many nights, and then combining them into a single, deeper image.
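
To see why stacking helps, here is a toy simulation, not a real pipeline. It assumes perfectly aligned exposures, a constant true signal, and independent Gaussian noise in every pixel; the function names and all the numbers are made up for illustration. Real stacking software also has to register frames against each other and reject outliers such as cosmic rays, which is skipped here.

```python
import random
import statistics


def simulate_exposure(n_pixels, signal, noise_sigma, rng):
    """One exposure: a constant true signal plus independent Gaussian noise per pixel."""
    return [signal + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def mean_stack(exposures):
    """Average already-aligned exposures pixel by pixel."""
    return [statistics.fmean(pixel_values) for pixel_values in zip(*exposures)]


rng = random.Random(42)  # fixed seed so the run is repeatable
n_exposures = 100
exposures = [
    simulate_exposure(2000, signal=5.0, noise_sigma=10.0, rng=rng)
    for _ in range(n_exposures)
]

single_noise = statistics.pstdev(exposures[0])
stacked = mean_stack(exposures)
stacked_noise = statistics.pstdev(stacked)

# With independent noise, the scatter should shrink by roughly sqrt(n_exposures).
print(f"Noise in one exposure:     {single_noise:.2f}")
print(f"Noise in the stacked image: {stacked_noise:.2f}")
print(f"Improvement factor:         {single_noise / stacked_noise:.1f}")
```

In a single frame the signal of 5 is buried under noise of about 10; after averaging 100 frames the noise drops to roughly a tenth of that, and the signal stands out clearly.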

These developments point toward an alternative future for astronomy. Instead of building single enormous telescopes, we could be building fleets of many small telescopes. We could sum the images together from all those telescopes to get incredibly deep images, or we could have that fleet "fan out" and scan the entire sky simultaneously, or we could do some combination.

The math on this checks out: stacking images combines their photon counts, so the fleet of telescopes has an effective aperture area which is the sum of its components' areas. This means that an equivalent to the 10.4m Gran Telescopio Canarias (GTC), which cost $180 million and took 9 years, could be built with 430 PlaneWave 0.5m telescopes, which would cost about $54 million, including enclosures and cameras [2]. This cost comparison isn't totally fair: the money for the GTC covered more than the physical equipment. It also covered management of the project and software efforts. But those couldn't possibly be over 70% of the total cost of the GTC; saving that much on the hardware really does matter.
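
The fleet sizing above can be reproduced in a few lines. This is a rough sketch that takes the per-unit cost of $124,000 from the footnote and the $180 million figure from the paragraph above; it assumes effective area simply adds across telescopes and ignores site, labor, and software costs. The exact area match comes out a few telescopes above the rounded 430.

```python
import math


def telescopes_needed(target_diameter_m: float, unit_diameter_m: float) -> int:
    """Number of small apertures whose combined area matches one large aperture."""
    return math.ceil((target_diameter_m / unit_diameter_m) ** 2)


UNIT_COST_USD = 124_000            # telescope + enclosure + camera, from the footnote
SINGLE_MIRROR_COST_USD = 180_000_000  # figure quoted above for the 10.4m telescope

n_units = telescopes_needed(10.4, 0.5)
fleet_cost = n_units * UNIT_COST_USD
cost_fraction = fleet_cost / SINGLE_MIRROR_COST_USD

print(f"Telescopes needed: {n_units}")
print(f"Fleet hardware cost: ${fleet_cost:,}")
print(f"Fraction of the single-mirror cost: {cost_fraction:.0%}")
```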

The reason the math works is that while photon-collecting power scales as the diameter squared, the cost of a telescope grows faster than that. Estimates of the exponent vary between 2.3 and 2.6, but either way, small telescopes can win out now that images can be added across multiple apertures.
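
If cost goes as diameter to the power alpha and area as diameter squared, then cost per unit of collecting area goes as diameter to the power (alpha - 2). The sketch below evaluates that ratio for the two exponents quoted above. It assumes a pure power law holds all the way from 0.5m to 10.4m, which is a simplification, and the function name is only illustrative.

```python
def cost_per_area_ratio(large_d_m: float, small_d_m: float, alpha: float) -> float:
    """How much more a large telescope costs per unit area than a small one,
    assuming cost ~ D**alpha and collecting area ~ D**2."""
    return (large_d_m / small_d_m) ** (alpha - 2)


for alpha in (2.3, 2.6):
    ratio = cost_per_area_ratio(10.4, 0.5, alpha)
    print(f"alpha = {alpha}: a 10.4 m telescope costs {ratio:.1f}x more per m^2 than a 0.5 m one")
```

Under these assumptions the penalty for going big is somewhere between a factor of about 2.5 and a factor of about 6 per square meter of aperture.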

Software eats the universe

This shift towards small telescopes would fundamentally change the problems of telescope engineering. It would move away from structural and mechanical engineering and towards software.

This is an appealing switch. It's much easier to make incremental improvements on software, and astronomers could more easily ride the wave of technological progress that's funded by Silicon Valley megacorporations. Tech giants need to process tremendous volumes of data, which has driven many advances in software (and computing hardware) that can be re-applied to astronomy work. There aren't many analogous engines powering development of, say, adaptive optics stabilizers that can precisely deform enormous mirrors dozens of times per second.

There are industrial waves to ride in some areas, of course - most notably in cameras. CMOS camera technology has improved by leaps and bounds, largely motivated by its use in iPhones. Those same chips could be used as the sensors on telescopes too, at pretty attractive prices.

Mainframes vs datacenters

There is a strong analogy here to the computing revolution's transition away from mainframe computers. For decades, computers were colossal devices weighing tons. Even when "minicomputers" became available, the trend was still towards using enormously powerful single machines. In academia, supercomputers continued to be emphasized well into the 1990s and early 2000s.

Modern datacenters do not work this way. Google pioneered using cheap, off-the-shelf commodity computers, stitching them together into an enormous fabric of many simple and replaceable parts. This has proved to be extremely successful, and the basic model has fueled the cloud computing boom of the last ten years.

Something similar is due for astronomy. A design based on many small telescopes has many operational advantages over a single large telescope. Individual component telescopes can be removed for repair, or replaced outright. They can be shipped offsite to be maintained, rather than bringing the maintenance crew to a mountaintop in the Atacama desert or Hawaii. The design can be upgraded in place, or incrementally, and experimental new ideas can be added gradually to the system.

Better ramp-up

To a funding agency, this could be a much more attractive proposition. Rather than requiring an enormous block of billions of dollars, the system could be built incrementally.

Since you'd largely be using commercially available, off-the-shelf components, you'd get to the science much more quickly.

Compare this to the long construction, commissioning, and operating phases for a large telescope. These can take many years each, and require different teams. The hand-off processes between, say, commissioning and operations can be mind-achingly slow and careful as a whole new team needs to get up to speed in understanding a new, unique, one-of-a-kind device.

If we ran telescopes like datacenters instead of mainframes, you'd view bringing new telescopes online more like adding capacity. In the jargon of the tech industry, you'd be managing cattle instead of pets.

Better science

A fleet is also easier to adapt to science operations that target multiple use cases. You can try new ideas, or new techniques, using a subset of the meta-telescope.

You could flexibly choose to point many of the telescopes at just one thing, to go for depth. Or you could spread them out for breadth, capturing a larger region of the sky, like surveying telescopes. Uniquely, you'd be able to get both of these observing strategies at once.
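
The depth-versus-breadth trade can be put in rough numbers. The sketch below assumes identical telescopes, equal exposure times, and noise-limited observations where signal-to-noise grows as the square root of the number of co-added telescopes, so stacking n of them reaches about 2.5 * log10(sqrt(n)) magnitudes fainter. The 430-telescope fleet size is borrowed from earlier in the post; the function names and the even split are illustrative assumptions.

```python
import math


def depth_gain_mag(n_stacked: int) -> float:
    """Extra limiting magnitude from co-adding n identical telescopes,
    assuming signal-to-noise grows as sqrt(n)."""
    return 2.5 * math.log10(math.sqrt(n_stacked))


def split_fleet(fleet_size: int, n_fields: int):
    """Divide the fleet evenly across fields; returns (telescopes per field, leftover)."""
    return divmod(fleet_size, n_fields)


FLEET_SIZE = 430  # fleet size used earlier in the post

for n_fields in (1, 10, 43, 430):
    per_field, leftover = split_fleet(FLEET_SIZE, n_fields)
    gain = depth_gain_mag(per_field)
    print(
        f"{n_fields:3d} fields x {per_field:3d} telescopes "
        f"(+{leftover} spare): {gain:.2f} mag deeper than one telescope"
    )
```

Any row in between the extremes is a valid configuration, and nothing stops different groups from running different rows at the same time.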

More exotically, you could devote a fraction of your observational power to rapid follow-up, investigating (for example) supernovae or interesting solar system phenomena like near Earth objects. With a mega-telescope, you'd need to point the entire $3 billion beast in one direction, but with many little telescopes you could split the view.

You could also use different filters on different individual telescopes, giving a multi-color simultaneous view - something that is extremely difficult to do with today's infrastructure, and which is of particular interest when studying transients that demand fast follow-up.

The many little telescopes could also have a longer lifetime of supporting many groups. Time can be allocated in slices at a much more granular level than with an enormous telescope.

Better resilience

Using many little telescopes lets this system be distributed, which means it can be more resilient to failures. These happen more often than you might think; eruptions at Mauna Loa have knocked out Pan-STARRS for months when lava crossed the only access road and power was cut off. When you only have one enormous, expensive observatory, this is an extremely expensive downtime event. If you estimate 15,000 days (about 40 years) of lifetime for one of those $3 billion telescopes, then each day costs $200,000. Months of downtime means tens of millions of wasted dollars.

But with many little telescopes, you don't need all your eggs in one basket. Sure, you might lose even 20% of your overall capacity, but the telescope still works and can be generating lots of useful scientific data.
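
Here is the downtime arithmetic spelled out. It is a back-of-the-envelope sketch: the 90-day outage is a hypothetical example, and the fleet is assumed, purely for comparison, to have the same capital cost and lifetime as the single telescope while losing only 20% of its capacity.

```python
def downtime_cost(capital_cost, lifetime_days, outage_days, fraction_offline):
    """Capital cost amortized per day, charged for the share of capacity
    that sits idle during an outage."""
    cost_per_day = capital_cost / lifetime_days
    return cost_per_day * outage_days * fraction_offline


CAPITAL_USD = 3_000_000_000
LIFETIME_DAYS = 15_000
OUTAGE_DAYS = 90  # hypothetical three-month outage

single_loss = downtime_cost(CAPITAL_USD, LIFETIME_DAYS, OUTAGE_DAYS, 1.0)
fleet_loss = downtime_cost(CAPITAL_USD, LIFETIME_DAYS, OUTAGE_DAYS, 0.2)

print(f"Amortized cost per day:       ${CAPITAL_USD / LIFETIME_DAYS:,.0f}")
print(f"Single telescope, fully down: ${single_loss:,.0f}")
print(f"Fleet with 20% offline:       ${fleet_loss:,.0f}")
```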

Similarly, by distributing work across more components, repairs no longer require the entire device to be taken offline; instead, pieces can be swapped out piecemeal. When working on real-time data pipelines, I was pretty astonished by the frequency of maintenance for telescopes. Cooling systems seem to break all the time - certainly at least a few lost weeks per year, sometimes a few months. Telescope operators try to get machines to limp along during their dry seasons, holding out for cloudy months to stuff all the repairs and upgrades in. A distributed system, though, would permit a much more flexible maintenance schedule.

All this resilience is important for maximizing photons per dollar, but it's also important because some science, like studying periodically varying sources, can only be done with frequent observation.

Who is doing this now?

So, many little telescopes have lots of advantages, and the idea is not entirely novel. You can see pieces of it being developed over time.

Way back in 2013, the "Dragonfly Telephoto Array" strapped a bunch of Canon telephoto lenses - you know, the kind used in sports photography - to commercially available cameras. They targeted a narrow scientific case, looking for low surface brightness objects like broad, dim nebulae, but were very effective in that case.

The Condor Array Telescope in New Mexico came online in 2023. It uses amateur telescopes attached to a simple, commercially available mount, and emphasizes refracting telescopes since they're cost effective.

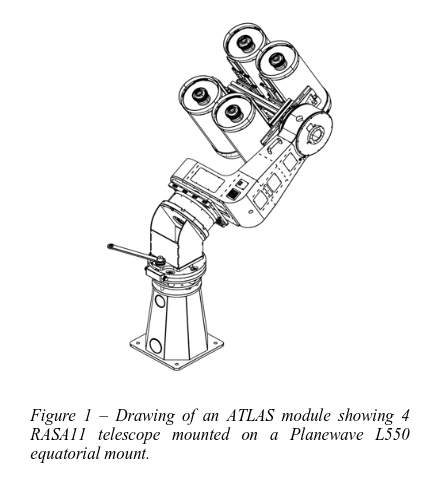

ATLAS is perhaps the closest to the many-little-telescopes idea, because it actually has five separate observing sites, spread between Hawaii, Chile, South Africa, and the Canary Islands. The most recent installation, ATLAS-Teide, was built in 2023 using parts you could mostly buy on Amazon: Celestron RASA 11 telescopes, and QHY600PRO CMOS cameras.

All of these projects were relatively low-cost, and small scale. I expect we'll see them grow over time, adding capabilities.

The challenges coming

The many little telescopes paradigm obviously is not without problems. Project management and logistics for multiple sites with dozens (or hundreds, or even thousands) of telescopes will be very different from current observatory operations. Software that can efficiently and accurately stack images from multiple observing sites, possibly with entirely different optical trains, will need to be developed.

But there are just so many advantages that I can't see any other future really panning out. We're hitting the technical limits for huge telescopes, and as we continue probing deeper and deeper into the night sky, I think we'll be forced to adapt to totally new designs for our most powerful astronomical instruments.

It'll be an exciting few decades to see what comes.

[1] Computing and multi-wavelength observation also deserve a lot of credit. ↩

[2] This assumes $54,000 for the telescope itself from PlaneWave, $20,000 for an enclosure, and $50,000 for a camera, totaling $124,000 per component telescope. The enclosure number appears to be reasonable from looking at hobbyist telescope domes, and the camera number is based on the cost of the QHY411 scientific camera. It's all a rough estimate; the dewar for the camera might add significantly to cost (but hopefully shouldn't since it's relatively small), and volume discounts might lower the bill. The point is that this is a cost-competitive approach. ↩